With the rapid evolution of large vision-language models (VLMs), a natural question emerges: what’s the best OCR solution available right now?

In particular, there’s been buzz around Mistral’s OCR capabilities, released over a month ago. But does it live up to the hype?

OCR is Still a Hard Problem

While Mistral OCR is an impressive achievement, OCR itself remains a challenging domain. Accurately recognizing text — especially in noisy, structured, or complex documents — pushes the limits of many models. And when OCR is powered by LLMs, there’s always the risk of:

- Hallucinations (fabricating content that isn’t there)

- Dropped text

- Poor table structure parsing
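To make the first two failure modes concrete, here is a minimal sketch of how you might flag them yourself, assuming you have a trusted reference transcript for a page. It is not how the benchmarks below score models; the function name `diff_ocr_words` and the sample strings are hypothetical, and the comparison is a simple word-level diff using Python's standard `difflib`.

```python
from difflib import SequenceMatcher


def diff_ocr_words(reference: str, ocr_output: str) -> dict:
    """Word-level diff of OCR output against a trusted reference transcript.

    Hypothetical helper for illustration: words only in the reference are
    treated as dropped, words only in the OCR output as hallucinated.
    """
    ref_words = reference.split()
    ocr_words = ocr_output.split()
    matcher = SequenceMatcher(a=ref_words, b=ocr_words, autojunk=False)

    dropped, hallucinated = [], []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        # "delete" and "replace" both mean reference words went missing.
        if tag in ("delete", "replace"):
            dropped.extend(ref_words[i1:i2])
        # "insert" and "replace" both mean the OCR added words of its own.
        if tag in ("insert", "replace"):
            hallucinated.extend(ocr_words[j1:j2])
    return {"dropped": dropped, "hallucinated": hallucinated}


# Made-up sample data, not taken from any benchmark.
reference = "Invoice total: 1,250.00 USD due on 2024-05-01"
ocr_output = "Invoice total: 1,250.00 USD payable immediately due on"
result = diff_ocr_words(reference, ocr_output)
print(result)
```

A word-level diff like this is crude (it ignores layout and treats a single-character misread as both a drop and a hallucination), but it is often enough to spot-check a handful of pages before trusting a model in a pipeline.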

Benchmarks: Mistral vs Gemini Flash 2.0

Two recent benchmarks shed some light on how current models compare in real-world document parsing tasks.

🔍 Reducto.ai Benchmark

Reducto.ai conducted an in-depth benchmark comparing Mistral OCR and Gemini Flash 2.0. You can read the full write-up here:

👉 https://reducto.ai/blog/lvm-ocr-accuracy-mistral-gemini

Key takeaway:

Mistral performs well, but in Reducto's results it trails Gemini Flash 2.0, especially in structured document parsing, table reconstruction, and overall OCR fidelity.

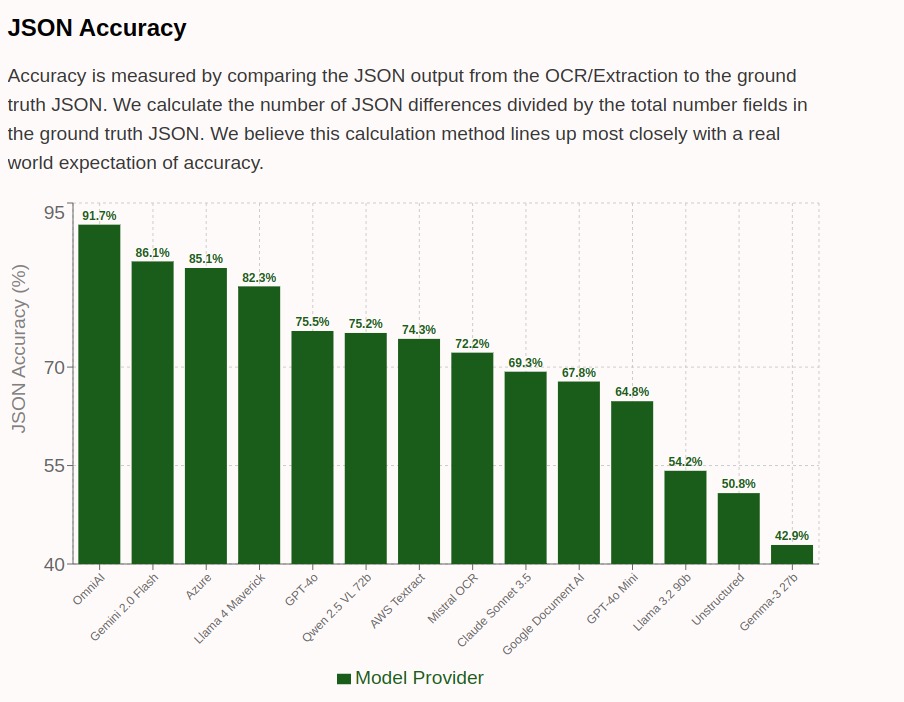

📊 GetOmni.ai Results

GetOmni.ai published their own benchmark, comparing Gemini Flash 2.0, Azure OCR, and Mistral. The full results are here:

👉 https://getomni.ai/ocr-benchmark

Final Thoughts

Mistral OCR is a step forward, but the benchmarks suggest it’s not quite on par with the current state-of-the-art in real-world OCR performance. Hallucinations, dropped text, and poor structure parsing still pose real challenges.

For production-grade OCR, especially when accuracy matters, these benchmarks suggest that Gemini Flash 2.0 and Azure OCR currently lead the pack.

Curious what you’re using for OCR in production today — and if you’ve seen similar results. Reach out on LinkedIn, or share your thoughts below.
