Living in the EU, I’ve had the opportunity to observe the rapidly evolving landscape of artificial intelligence regulation. In this post, I’ll explore the current state of AI law, focusing particularly on the EU’s pioneering AI Act and its implications for businesses and individuals alike.

This blog post is based on lectures presented at WWSI 2025 in Warsaw, Poland, as part of their Artificial Intelligence - Machine Learning Engineering postgraduate program.

What is Artificial Intelligence?

In essence, AI represents the ability of machines to perceive their environment, process information, learn from data, and take actions that maximize their chance of achieving specific goals.

The concept dates back to the famous Turing test, proposed by Alan Turing in 1950: if a machine can hold a conversation with a human without the human realizing they’re speaking with a machine, then the machine exhibits intelligence. While the Turing test has its limitations, it provides a conceptual framework for understanding artificial intelligence.

We’ve seen AI’s capabilities grow dramatically over time - from IBM’s Deep Blue defeating chess champion Garry Kasparov in 1997 to today’s sophisticated language models that can generate human-like text, images, and even code.

The Growing Impact of AI Across Sectors

Artificial intelligence is transforming virtually every sector of our economy:

- Healthcare: AI is enabling personalized therapies, diagnostics, and monitoring of physiological processes

- Finance: Credit scoring, fraud detection, and investment strategies increasingly rely on AI

- Manufacturing: Process optimization, predictive maintenance, and robotics

- Retail: Personalized recommendations, inventory management, and customer service

- Transportation: Autonomous vehicles, traffic management, and logistics optimization

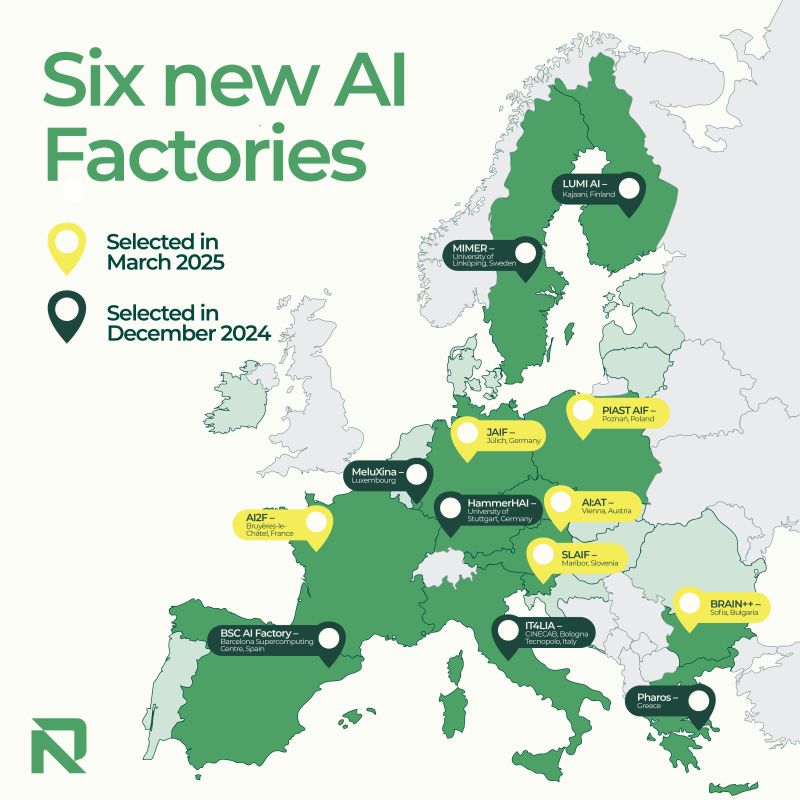

The European Commission has recently highlighted the development of “AI factories” in key sectors across member states. In Poland, the “Piast” AI factory has been established at the Poznań Supercomputing and Networking Center (PCSS), focusing on medical, technological, and digital applications. This initiative is funded with €50 million from the European Commission and an additional 340 million PLN from the Polish government, aiming to accelerate AI adoption in sectors including health and life sciences, IT and cybersecurity, space and robotics, sustainability, and the public sector[1][2].

The Need for Regulation

With AI’s growing influence comes important questions: Should AI be regulated? If so, how? And who should bear responsibility when AI systems cause harm?

There are several compelling reasons why regulation is necessary:

- Protection of fundamental rights and values: Ensuring AI systems don’t discriminate or infringe on human dignity

- Data privacy concerns: AI systems often process enormous amounts of personal data

- Safety and security: Preventing harmful applications of AI

- Liability clarity: Establishing who’s responsible when AI causes harm

- Consumer protection: Ensuring transparency when interacting with AI systems

These concerns align with the objectives stated by the European Union and other regulatory bodies globally[3][4].

Different Regulatory Approaches Worldwide

The European Approach: The AI Act

The European Union has taken the lead with the AI Act (Regulation (EU) 2024/1689), formally published on July 12, 2024[5]. This comprehensive regulation aims to protect users and fundamental rights while enabling innovation.

Key aspects of the EU approach include:

- Risk-based categorization of AI systems

- Prohibition of specific AI practices

- Transparency requirements

- Compliance obligations for high-risk AI systems

- Regulatory sandboxes for testing

The regulation sets harmonized rules for AI development, marketing, and use within the EU, focusing on human-centric, trustworthy AI that respects European values[6][4].

The American Approach

The United States has taken a more fragmented approach. Under President Biden, an executive order was issued in October 2023 to ensure the safe, secure, and trustworthy development of AI. However, this order was revoked by President Donald Trump in January 2025 through an executive order titled “Removing Barriers to American Leadership in Artificial Intelligence”[7][8].

Currently, there are no comprehensive federal regulations in the US. Instead, we see state-level initiatives:

- The Colorado AI Act, passed in 2024, is the first comprehensive state-level AI legislation

- California has enacted various AI-related laws focused on transparency, disclosure requirements, and risk management[9]

The Chinese Approach

China doesn’t yet have a unified AI regulation equivalent to the EU’s AI Act, but it’s in the works. The State Council’s 2023 legislative plans indicated the development of comprehensive regulation in coming years.

Currently, AI regulations come from the Cyberspace Administration of China, which issues binding recommendations for content providers and users. In July 2023, China finalized its “Measures for the Administration of Generative Artificial Intelligence Services,” which regulate the development and use of generative AI systems[10]. Starting September 2025, all AI-generated content in China will need to be clearly marked - similar to the transparency requirements in the EU’s AI Act[11].

Implementation Timeline of the AI Act

The AI Act entered into force on August 1, 2024, but different provisions have varying application dates:

- February 2, 2025: General provisions and prohibited practices (Chapters 1 and 2) became applicable

- August 2, 2026: Most provisions will apply, including compliance requirements and penalties

- August 2, 2027: Full application including provisions related to high-risk systems
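The phased dates above can be captured in a small lookup, which is handy when checking what already applies on a given day. The following Python sketch is only an illustration: `MILESTONES` and `applicable_milestones` are hypothetical names, and the labels paraphrase the list above rather than the Act's legal text.

```python
from datetime import date

# Application dates as listed in this post. This is a simplification
# for illustration, not a restatement of the Act's full schedule.
MILESTONES = [
    (date(2025, 2, 2), "General provisions and prohibited practices"),
    (date(2026, 8, 2), "Most provisions, including compliance requirements"),
    (date(2027, 8, 2), "Full application, including high-risk provisions"),
]


def applicable_milestones(on: date) -> list[str]:
    """Return the labels of all milestones whose application date is on or before `on`."""
    return [label for start, label in MILESTONES if on >= start]


if __name__ == "__main__":
    for label in applicable_milestones(date.today()):
        print(label)
```

For example, `applicable_milestones(date(2025, 6, 1))` returns only the first entry, since the 2026 and 2027 dates have not yet passed.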

The European Commission has published detailed guidelines to help organizations determine whether they’re using AI systems as defined by the Act and to clarify what constitutes prohibited practices[12].

Key Considerations for Organizations

When examining whether your IT system falls under the AI Act’s scope, consider these factors:

- Does it meet the definition of an AI system? The Act defines AI systems as those designed to operate with varying degrees of autonomy and that can show adaptability by learning from data.

- What is excluded? Systems for mathematical optimization, basic data processing following pre-specified instructions, classic heuristics, and simple predictive systems using elementary statistical rules are excluded.

- What’s the risk level? The Act categorizes AI systems based on risk levels, with different obligations for each category.

- Testing considerations: Testing AI systems under real conditions may still fall under the Act’s scope. For example, testing facial recognition software in public spaces requires compliance with the Act unless conducted in controlled laboratory conditions or a regulatory sandbox[13].
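The questions above can be turned into a rough first-pass screening checklist. The sketch below is a hypothetical illustration in Python, not a legal test: `SystemProfile`, `Exclusion`, and `screen` are invented names, the fields are simplified assumptions, and a real assessment needs the Act's full text and legal review.

```python
from dataclasses import dataclass
from enum import Enum


class Exclusion(Enum):
    """Excluded categories as summarized in this post (simplified)."""
    NONE = "none"
    MATH_OPTIMIZATION = "mathematical optimization"
    BASIC_DATA_PROCESSING = "basic data processing following pre-specified instructions"
    CLASSIC_HEURISTICS = "classic heuristics"
    SIMPLE_STATISTICS = "simple prediction using elementary statistical rules"


@dataclass
class SystemProfile:
    """Hypothetical self-assessment answers for one IT system."""
    name: str
    operates_with_autonomy: bool
    exclusion: Exclusion = Exclusion.NONE
    tested_in_real_conditions: bool = False
    in_lab_or_sandbox: bool = False


def screen(profile: SystemProfile) -> list[str]:
    """Walk through the checklist in order and collect notes for follow-up."""
    if profile.exclusion is not Exclusion.NONE:
        return [f"Likely excluded: {profile.exclusion.value}"]
    if not profile.operates_with_autonomy:
        return ["May not meet the AI system definition: no autonomy"]

    notes = ["May meet the AI system definition: determine its risk category"]
    if profile.tested_in_real_conditions and not profile.in_lab_or_sandbox:
        notes.append("Real-world testing outside a lab or sandbox may be in scope")
    return notes


if __name__ == "__main__":
    pilot = SystemProfile(
        name="facial recognition pilot",
        operates_with_autonomy=True,
        tested_in_real_conditions=True,
    )
    for note in screen(pilot):
        print(note)
```

The function returns notes rather than a yes/no verdict on purpose: each answer is a prompt for closer review, not a conclusion about compliance.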

Regulatory Sandboxes

For organizations looking to test innovative AI solutions, regulatory sandboxes offer a controlled environment where certain requirements may be relaxed. These sandboxes allow for experimentation while maintaining oversight.

Article 57 of the AI Act establishes the framework for these AI regulatory sandboxes, enabling the testing and development of innovative AI systems under regulatory supervision before they are placed on the market[14]. These environments are designed to foster innovation while ensuring compliance with the Act’s requirements.

The frameworks for these sandboxes are still being developed across EU member states, including Poland, which is addressing them in its upcoming law on artificial intelligence[15].

Liability Questions

One of the most challenging aspects of AI regulation concerns liability. Who bears responsibility when an AI system causes harm? Is it the developer, the deployer, or the user?

While the AI Act focuses primarily on risk prevention and compliance, separate work on AI liability had been underway through a proposed AI Liability Directive. However, in early 2025, the European Commission withdrew this directive due to lack of agreement, shifting focus to the implementation of the AI Act[16][17].

For now, organizations must rely on existing liability frameworks:

- The entity deploying an AI solution bears primary responsibility

- Recourse liability may allow this entity to seek compensation from the solution provider if design flaws are found

- The distinction between “providers” (who develop and market AI systems) and “deployers” (who use them) is crucial for determining liability[18]

As noted by legal experts, this creates a chain model of liability where responsibility may flow from the user of an AI system to its developer, depending on the specific circumstances[19].

Conclusion

The AI Act represents the world’s first comprehensive regulation of artificial intelligence. While some argue it may restrict innovation, it provides important protections for citizens and clarity for organizations deploying AI.

As we navigate this new regulatory landscape, organizations should:

- Assess whether their systems fall under the AI Act’s definition of artificial intelligence

- Identify prohibited practices and ensure compliance with applicable requirements

- Monitor ongoing developments in implementation guidelines

- Consider utilizing regulatory sandboxes for testing innovative solutions

The field of AI law will continue evolving rapidly. By staying informed and proactive, organizations can ensure compliance while continuing to innovate responsibly.

References

This blog post is based on information available as of April 2025 and provides a general overview. It is not legal advice. Always consult with qualified legal professionals for specific guidance related to AI regulations and compliance.

- Research in Poland: Six new AI factories to launch in Europe, one in Poznań

- European Commission announcement of AI factories including Poland’s Piast AI factory

- The White House: Removing Barriers to American Leadership in Artificial Intelligence

- Reuters: Trump revokes Biden executive order addressing AI risks

- JD Supra: California’s AI Laws Are Here. Is Your Business Ready?

- Library of Congress: China: Generative AI Measures Finalized

- LinkedIn: China Mandates Labels for All AI-Generated Content

- Digital Policy Alert: Draft Act on Artificial Intelligence Systems in Poland

- Babl AI: EU Withdraws AI Liability Directive, Shifting Focus to EU AI Act Compliance

- Stephenson Harwood: The roles of the provider and deployer in AI systems and models

- Taylor Wessing: AI Liability: Who is accountable when artificial intelligence malfunctions?